
Problem

Instead of physical buttons and other input devices mapped to interactions, allow the user to directly interact with on-screen objects and controls.

Solution

Gestures, as a whole, attempt to move past the previous state of the art, in which controls were mapped to actions, by using the conceit of a direct interface. Items on the screen are assumed to be physical objects which can be "directly" manipulated in realistic (if sometimes practically impossible) ways.

Gesture-based interfaces should always keep this in mind, and seek to use natural or discoverable behaviors closely coupled to the gestures. Avoid arbitrary gestures, or those with little relationship to the interface-as-physical-object paradigm.

Technology may limit the availability of some gestures. Two key pitfalls are poor or no drag response, and lack of multi-touch support. Many resistive or beam screens do not support drag actions well, so interfaces for them must be designed to use only click actions, such as buttons instead of draggable scrollbars.

See the Directional Entry pattern for additional details about the use of gestural scrolling, especially in constrained inputs such as single-axis scrolling.

Variations

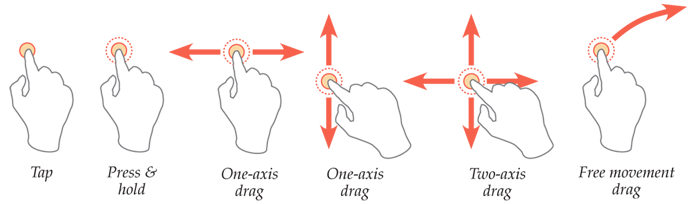

Gesture, within this pattern, refers to any direct interaction with the screen, including simple momentary tapping. A variety of gestures is available, but not all complex actions are universally mapped to a behavior. Instead, gestures are listed by class of input alone:

Tap - The contact point (finger, stylus, or so forth) is used to activate a button, link or other item with no movement, just momentary selection.

Press-and-hold - The contact point is held down for a brief time, to activate a secondary action. This may be an alternative to a primary (Tap) action, or may activate an optional behavior for an area that is not normally selectable. See the Press-and-hold pattern for additional details.

One-point Drag - See Directional Entry for details on interaction, such as behavior for restricted axes of entry.

One-axis - Scrolling or dragging of items (repositioning) may be performed in only a single axis, either vertical or horizontal. The other axis is locked out.

Two-axis - Scrolling or dragging of items may be performed in both the vertical and horizontal axes, but usually only one at a time. A typical use would be a series of Vertical Lists which are on adjacent screens, and must be scrolled between, in a Film Strip manner.

Free movement - Objects, or the entire page, can be moved or scrolled to any position, at any angle. For movement of areas, see the Infinite Area pattern.
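The three One-point Drag variations above can be sketched as a single function that constrains a drag delta. This is a minimal illustration only; the function name and the mode strings are hypothetical, and the "dominant axis wins" rule for Two-axis movement is an assumption about one reasonable way to allow only one axis at a time.

```python
def lock_to_axis(dx, dy, mode):
    """Constrain a drag delta (dx, dy) to the axes the area allows.

    mode is one of "vertical", "horizontal", "two-axis" or "free".
    These names are illustrative, not taken from any toolkit.
    """
    if mode == "vertical":
        return (0.0, dy)   # horizontal axis is locked out
    if mode == "horizontal":
        return (dx, 0.0)   # vertical axis is locked out
    if mode == "two-axis":
        # Both axes are allowed, but only the dominant one at a time.
        return (dx, 0.0) if abs(dx) >= abs(dy) else (0.0, dy)
    if mode == "free":
        return (dx, dy)
    raise ValueError(f"unknown mode: {mode}")
```

In a real interface the Two-axis choice would probably be made once, early in the drag, and held until the contact point leaves the screen, so that the content does not jump between axes mid-gesture.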

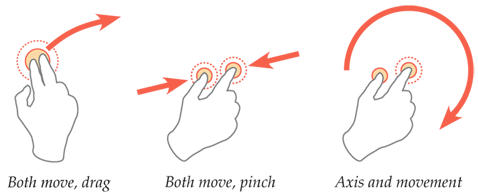

Two-point actions - Generally, using two fingers, these use movement to perform gestures on larger areas, where enough room is provided for both points to be on the area to be affected.

Both move - Two-point gestures are similar to Press-and-hold, in that they are usually used to perform a secondary action, different from a One-point Drag. These are often used to deconflict from single-point gestures. For example, a single-finger gesture may select text, a two-finger gesture may scroll the page, and a four-finger gesture from the edge may load an options dialogue.

Axis and move - One point fixes the item to be rotated, providing an axis, while the other moves about this axis. To be perceived as this action, the axis finger must move as little as a typical Tap action.

Multi-point input - May use three or four (rarely more) fingers to perform simple gestures, generally affecting the entire device or the entire currently-running application. Often used to deconflict from single-point drag actions. For example, a single-finger drag may scroll the screen, but a four-finger drag will open a running applications list.
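As a rough sketch of how the two kinds of two-point action might be told apart, the code below treats a finger as stationary if it drifts no further than a Tap would. The 2 mm threshold, the function names and the coordinate units (millimetres) are all assumptions made for the example.

```python
import math

TAP_SLOP_MM = 2.0  # assumed: drift still small enough to count as "not moving"


def _dist(a, b):
    return math.hypot(b[0] - a[0], b[1] - a[1])


def classify_two_point(start_a, end_a, start_b, end_b, slop=TAP_SLOP_MM):
    """Return "both-move", "axis-and-move" or "none" for a two-finger gesture."""
    moved_a = _dist(start_a, end_a) > slop
    moved_b = _dist(start_b, end_b) > slop
    if moved_a and moved_b:
        return "both-move"
    if moved_a or moved_b:
        return "axis-and-move"  # one finger pins the axis, the other rotates
    return "none"


def rotation_degrees(axis, start, end):
    """Signed angle swept by the moving finger around the axis finger.

    Positive is counter-clockwise when y points up; on screens where y
    points down, the same value reads as clockwise.
    """
    a0 = math.atan2(start[1] - axis[1], start[0] - axis[0])
    a1 = math.atan2(end[1] - axis[1], end[0] - axis[0])
    # Normalise into [-180, 180) so a small turn never reports as ~360.
    return (math.degrees(a1 - a0) + 180.0) % 360.0 - 180.0
```

For example, one finger resting near (0, 0) while the other sweeps from (10, 0) to (0, 10) classifies as "axis-and-move" and reports a quarter turn.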

Interaction Details

Whenever possible, gestures should attempt to simulate physical changes in the device. Pretend the screen is a physical object like a piece of paper, with access only through the viewport. These can even be very roughly prototyped by moving paper on a desk. Drag to move the paper, rotate by fixing it with one finger and moving the other, and so on.

Some actions require a bit more imagination. The "pinch" gesture assumes the paper is stretchy and contains an arbitrary amount of information; spreading stretches the paper, showing more detail, pinching squishes the paper, showing less.
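The "stretchy paper" idea can be expressed as a scale factor: the ratio of the current distance between the two contact points to their distance when the gesture began. The sketch below assumes that interpretation; the function and parameter names are invented for illustration.

```python
import math


def pinch_scale(start_a, start_b, now_a, now_b, min_span=1e-6):
    """Scale factor implied by a pinch or spread gesture.

    Values above 1.0 mean the fingers spread apart (show more detail);
    values below 1.0 mean they pinched together (show less).
    """
    start_span = math.hypot(start_b[0] - start_a[0], start_b[1] - start_a[1])
    now_span = math.hypot(now_b[0] - now_a[0], now_b[1] - now_a[1])
    if start_span < min_span:
        return 1.0  # fingers started on the same spot; avoid dividing by zero
    return now_span / start_span
```

Multiplying the zoom level at the start of the gesture by this factor on every update keeps the content under the fingers stretching in step with them.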

Inertia can also be used when it would help the interaction, such as scrolling of long lists. This must also follow a consistent set of reasonable physical rules, with the initial speed being that of the drag gesture, and deceleration simulating friction in mechanical systems.
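One simple way to approximate this is to start from the release velocity of the drag and multiply it by a friction factor on every frame until it falls below a cutoff. The sketch below assumes that model; the friction factor, frame time and cutoff are illustrative values, not recommendations.

```python
def inertia_positions(position, velocity, friction=0.95, dt=1 / 60, min_speed=1.0):
    """Positions of a scrolled item on each frame after the finger lifts.

    velocity is in units per second, taken from the drag at release.
    friction is the fraction of speed kept each frame (0 <= friction < 1).
    """
    if not 0.0 <= friction < 1.0:
        raise ValueError("friction must be in [0, 1) so the motion stops")
    positions = []
    while abs(velocity) >= min_speed:
        position += velocity * dt   # move at the current speed
        velocity *= friction        # then lose some speed to "friction"
        positions.append(position)
    return positions
```

Because the speed shrinks geometrically, each frame moves the content a little less than the last, and the total travel is bounded.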

Whenever possible, follow these principles, and do not use arbitrary gestures to perform tasks. This may be reflected in the response. For example, if a running-apps list is opened with a four-finger swipe, do not have a Pop-Up dialogue simply appear. Instead, have it drag in from the direction of, and at the speed of, the gesture.

All On-screen Gesture interactions follow a basic protocol:

- Contact point touches the screen.

- Any other input, such as dragging, is performed.

- Screen reflects any live changes, such as displaying a button-down state or drag movement.

- Input is stopped, and the contact point leaves the screen.

- The action is committed.

While many actions will be reflected while the contact point (e.g. finger) is down, they are generally not committed until the point leaves the screen. This can be used to determine when input is unintentional, or to give the user a method to abandon certain actions before committing them. Areas which only accept Tap actions, such as a button, should disregard drag actions larger than a few mm as spurious input.
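The protocol and the tap rule above can be sketched as a small state machine for a tap-only control. This is an illustration under assumptions: the class and method names are hypothetical, coordinates are in millimetres, and 3 mm stands in for "a few mm".

```python
import math

TAP_SLOP_MM = 3.0  # assumed value for "a few mm"


class TapTarget:
    """A button-like area that commits only when the contact point lifts."""

    def __init__(self, name):
        self.name = name
        self.state = "idle"   # idle -> pressed -> (cancelled) -> idle
        self._start = None

    def touch_down(self, x, y):
        self._start = (x, y)
        self.state = "pressed"        # live feedback only; nothing committed

    def touch_move(self, x, y):
        if self.state != "pressed":
            return
        drift = math.hypot(x - self._start[0], y - self._start[1])
        if drift > TAP_SLOP_MM:
            self.state = "cancelled"  # too much movement: spurious input

    def touch_up(self):
        """Return True if the tap is committed now that the contact left."""
        committed = self.state == "pressed"
        self.state = "idle"
        self._start = None
        return committed
```

Dragging away before lifting therefore doubles as the user's way to abandon a tap they did not mean.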

For multi-point gestures, the device generally samples input quickly enough to tell which finger makes contact first. When the second finger makes contact, any actions performed to date (including things like drags) will be disregarded and returned to the start position, in preparation for the multi-finger gesture to come.
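A minimal sketch of that reset, assuming a hypothetical `DragSession` object that tracks one dragged item: a single-finger drag moves the item live, and the arrival of a second contact snaps it back to where it started.

```python
class DragSession:
    """Tracks one item while contact points arrive and move."""

    def __init__(self, start_x, start_y):
        self.start = (start_x, start_y)
        self.position = (start_x, start_y)
        self.contacts = 0

    def contact_down(self):
        self.contacts += 1
        if self.contacts == 2:
            # Second finger arrived: discard the single-finger drag so far.
            self.position = self.start

    def drag(self, dx, dy):
        # Only a lone contact drags the item in this sketch.
        if self.contacts == 1:
            self.position = (self.position[0] + dx, self.position[1] + dy)
```

What the multi-finger gesture then does is left out here, since it depends on the gesture being recognised.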

For multi-point gestures, all contacts must be removed within a very short timeframe, or the gesture will convert to a single-point gesture with the remaining contact point.
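The release rule can be sketched as a check on when each contact lifted. The 0.15 second window and all names below are assumptions for illustration; the text only says the timeframe is "very short".

```python
RELEASE_WINDOW_S = 0.15  # assumed length of the "very short timeframe"


def release_outcome(lift_times, now, window=RELEASE_WINDOW_S):
    """Decide how a multi-point gesture ends.

    lift_times maps a contact id to the time it lifted, or None if that
    contact is still on the screen. Returns "multi-point",
    "single-point" or "in-progress".
    """
    lifted = [t for t in lift_times.values() if t is not None]
    if not lifted:
        return "in-progress"          # nobody has let go yet
    first = min(lifted)
    still_down = [c for c, t in lift_times.items() if t is None]
    if not still_down:
        # Everyone lifted: was it close enough together?
        return "multi-point" if max(lifted) - first <= window else "single-point"
    if now - first > window:
        return "single-point"         # the remaining contact takes over
    return "in-progress"              # still inside the window; keep waiting
```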

Presentation Details

Since gestures are not committed until all contact points are removed, a sort of "hover" or "mouseover" state must be included for all links and buttons. While the point is in contact, this should behave exactly like a web mouse pointer, and highlight items under the centroid. An additional "submit" state should also be provided to confirm the action has been committed.

Position cursors may be used, even for simple tap behaviors, to give the user additional insight into, and confirmation of, the action; they are most helpful for more complex actions. See the Focus & Cursors pattern for some details.

Instead of a conventional cursor, a gesture indicator may be shown adjacent to the contact area, such as a rotate arrow when rotating.

When on-screen controls allow indirect input (via Form Selections) of the same action as the gesture, these should be changed to reflect the current state. Examples are scroll bars moving as a list is dragged, or an angle change form displaying the current angle in degrees as a rotate action is performed. All status updating must be "live," or so close to real time the user cannot see the difference.
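For the scroll bar example, the indicator can be recomputed from the list's scroll offset on every drag update, so the two never disagree. The sketch below assumes a simple proportional scroll bar; the function and parameter names are hypothetical.

```python
def scrollbar_thumb(offset, content_len, viewport_len, track_len):
    """Return (thumb_position, thumb_length) along a scroll bar track.

    offset is how far the content has been dragged from its start.
    All values share one unit, such as pixels.
    """
    if content_len <= viewport_len:
        return (0.0, float(track_len))   # everything fits; thumb fills track
    thumb_len = track_len * viewport_len / content_len
    max_offset = content_len - viewport_len
    clamped = min(max(offset, 0), max_offset)  # ignore overscroll
    thumb_pos = (track_len - thumb_len) * clamped / max_offset
    return (thumb_pos, thumb_len)
```

Calling this from the same handler that moves the list is one way to keep the update "live" in the sense described above.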

Antipatterns

Use caution with design, and test the interface with real users in real situations to avoid overly precise input parameters. If Tap actions are only accepted within an overly small area, the user will have trouble providing input.

Do not commit actions while the contact point is still on the screen. At best, if additional actions are blocked to prevent accidental second input, users may be confused as to how to continue. At worst, no actions are blocked, and whatever is under the contact point will be selected or acted upon without deliberate user input.
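One possible guard against the worst case, sketched under assumptions: when a new screen loads, any contact that is already down is marked stale, and lifting it commits nothing. The class name and the idea of passing in the set of active contact ids are hypothetical.

```python
class ScreenInput:
    """Input filter for a freshly loaded screen."""

    def __init__(self, contacts_down_at_load):
        # Contacts that were touching before this screen appeared.
        self._stale = set(contacts_down_at_load)

    def touch_up(self, contact_id):
        """Return True if lifting this contact may commit an action."""
        if contact_id in self._stale:
            # The finger was left over from the previous screen: ignore it,
            # but let the same finger act normally on its next touch.
            self._stale.remove(contact_id)
            return False
        return True
```

With this in place, a finger still resting on the previous screen's button cannot select whatever happens to appear underneath it.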

Devices without multi-touch support will either accept the first input, or (depending on the technology) determine a centroid based on all contact areas. Since touch devices do not generally display a cursor, the user cannot see where that centroid falls, which makes the device unusable. Instead, block all inputs whose total contact area is much larger than a typical fingertip (larger than about 25 mm).
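A minimal sketch of such a filter follows. It reads the 25 mm figure as a limit on the largest dimension of the contact patch, which is an interpretation rather than something the text specifies, and it assumes the hardware reports contact width and height in millimetres.

```python
MAX_CONTACT_MM = 25.0  # from the guideline above; treated as a size limit


def accept_contact(width_mm, height_mm, max_mm=MAX_CONTACT_MM):
    """Return True if the contact patch is plausibly a single fingertip."""
    # A palm, or two fingers merged into one blob, exceeds the limit
    # in at least one dimension and is rejected.
    return max(width_mm, height_mm) <= max_mm
```

For example, a 9 by 11 mm fingertip contact is accepted, while a 40 by 12 mm blob spanning two fingers is blocked.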

Examples

From RISKS 26.19: Subject: Voting machines with incredibly poorly written software

The video attached to this "voter problems" story actually contains something more disturbing than the allegations in the story itself: http://www.fox5vegas.com/news/25511115/detail.html

To wit: the person in charge of the polls says that there is no problem with the fact that the touch screen sensing a finger touching "English" accepts that input and proceeds to the next screen (where the ballot is), and if the person who just selected English hasn't removed their finger "quickly enough", whatever candidates name was under the same section of screen is instantaneously checked off, inadvertently. - Dr. Philip Listowsky, Computer Science Department, Yeshiva University NY

Not sure if this stays here, or is an actual referable item for the reference list.