|

Size: 17242

Comment:

|

Size: 17457

Comment:

|


| Line 3: | Line 3: |

| It’s pitch black outside. The air is cold and wet, yet carries a lingering sweet smell. Sporadic beams of light dance in the night, casting an eerie glow on the landscape. Giggles, whispers, and even the occasional scream carry through the streets, a reminder that others are about this night. | It’s pitch-black outside. The air is cold and wet, yet it carries a lingering sweet smell. Sporadic beams of light dance in the night, casting an eerie glow on the landscape. Giggles, whispers, and even the occasional scream carry through the streets, a reminder that others are out and about. |

| Line 5: | Line 5: |

| Through the eyes of one of these figures, a house is seen. The figure changes course and heads in the direction of the home. The home is unlit, and looks unoccupied. In one hand, the figure holds a large sack, the other yields a blunt sword. | Through the eyes of one of these figures, a house is seen. The figure changes course and heads in the direction of the house. The house is unlit and looks unoccupied. In one hand the figure holds a large sack; the other wields a blunt sword. |

| Line 7: | Line 7: |

| As the figure makes his way now up the porch and to the door, the hand with the sword points forward. The hand is not human. It’s about twice as big a man’s hand. Coarse, dark fur covers its skin, while jagged claws extend from the aged fingers. | As the figure makes his way up the porch and to the door, the hand that is holding the sword points forward. The hand is not a human’s hand. It’s about twice as big as a man’s hand. Coarse, dark fur covers its skin, while jagged claws extend from the aged fingers. |

| Line 11: | Line 11: |

| A chime echoes. The front door opens. The man who opens the door smiles happily while looking down, hardly frightened by the four foot tall, hairy monster screaming “Trick or Treat!" | A chime echoes. The front door opens. The man who opens the door smiles happily while looking down, hardly frightened by the four-foot-tall, hairy monster screaming “Trick or treat!” |

| Line 14: | Line 14: |

| == That sounds like a great idea == Take a moment to catch your breath, slow your heart beat down. Despite my enjoyment of Halloween, it’s not my focus for the remainder of this chapter. However, the doorbell that so frequently sounds during that annual night is. |

== That Sounds Like a Great Idea == Take a moment to catch your breath and slow your heartbeat. Despite my enjoyment of Halloween, it’s not my focus for the remainder of this chapter. However, the doorbell that so frequently sounds during that annual holiday is. |

| Line 17: | Line 17: |

| The doorbell is an outstanding example of an effective interactive control. If a 10 year old dressed as a monster with oversized, latex hands, in the dark can use it effortlessly, and anyone listening in the house (even the family dog) instantly understands what it means, it must work well. | The doorbell is an outstanding example of an effective interactive control. If a 10-year-old dressed as a monster with oversize latex hands can use it effortlessly in the dark, and anyone listening in the house (even the family dog) instantly understands what it means, it must work well! |

| Line 19: | Line 19: |

| Let’s examine why the doorbell is an effective control using Donald Norman’s Interface Model. | Let’s examine why the doorbell is an effective control, using Donald Norman’s Interaction Model. |

| Line 22: | Line 22: |

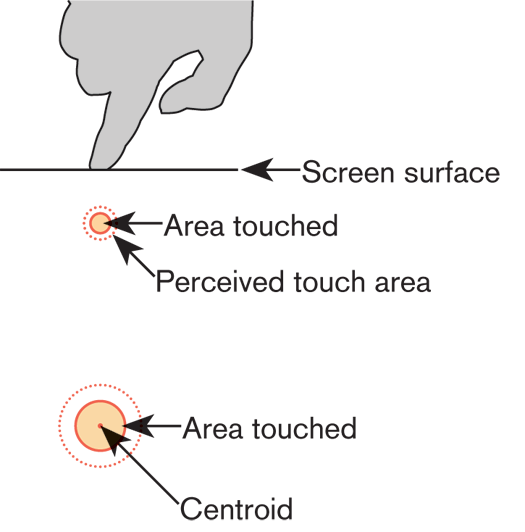

| == Make it Visible == {{attachment:GICintro-Centroid.png|Touch operates over an area, but defines the contact as a point. Understand how touch works to use it correctly.|align=right}} Any control needs to be visible when an action or state change requires its presence. The doorbell is an example of an “always present” control. There aren’t that many components on the door anyway (door knob, lock, windows and peepholes, maybe a knocker), so the doorbell is easy to locate. In many cases, the doorbell is illuminated, making it visible, and enticing, when lighting conditions are poor. |

=== Make it Visible === {{attachment:GICintro-Centroid.png|Figure 10-1. Touch operates over an area, but defines the contact as a point. Understand how touch works to use it correctly.}} Any control needs to be visible when an action or state change requires its presence. The doorbell is an example of an “always present” control. There aren’t that many components on the door anyway (doorknob, lock, windows and peepholes, maybe a knocker), so the doorbell is easy to locate. In many cases the doorbell is illuminated, making it visible and enticing when lighting conditions are poor. |

| Line 26: | Line 26: |

| Cultural norms, and prior experiences, have developed a mental model in which we expect the doorbell to be placed in a specific location -- within sight, within easy reach, and to the side of the door. So our scary monster was quickly able to detect the location of the doorbell from even his relatively more limited prior knowledge, as well as see it in the dark. | Cultural norms and prior experiences have developed a mental model in which we expect the doorbell to be placed in a specific location—within sight, within easy reach, and to the side of the door. So our scary monster was quickly able to detect the location of the doorbell from even his relatively more limited prior knowledge, as well as see it in the dark. |

| Line 28: | Line 28: |

| Having an object visible doesn’t have to mean it can be seen. It can also mean that the object is detected. Consider someone who is visually impaired. They still have the prior knowledge that the doorbell is located on the side within eye and reach level. It’s shape is uniquely tactile, making it easy to detect when fingers or hands make contact. Most doorbells are in notably raised housing, and while it's not clear if this is an artifact of engineering or not, it serves to make them easy to find and identify by touch as well. | Having an object visible doesn’t have to mean it can be seen. It can also mean the object is detected. Consider someone who is visually impaired. She still has the prior knowledge that the doorbell is located on the side of the door, at eye level and within reach. Its shape is uniquely tactile, making it easy to detect when fingers or hands make contact with it. Most doorbells are in notably raised housing, and although it’s not clear if this is an artifact of engineering, it serves to make the doorbell easy to find and identify by touch as well. |

| Line 30: | Line 30: |

| In mobile devices, this is a very important principle. Many times, we don’t have the opportunity to always look at the display for a button on the screen, but we can tactilely feel the different hardware keys. Consider people playing video games. Their attention is on the TV, not the device controller, yet they are easily able to push he correct buttons during game play. | In mobile devices this is a very important principle. Many times we don’t have the opportunity to always look at the display for a button on the screen, but we can feel the different hardware keys. Consider people playing video games. Their attention is on the TV, not the device controller, yet they are easily able to push the correct buttons during game play. |

| Line 33: | Line 33: |

| == Mapping == The term "mapping" within interactive contexts describes the relationship established between two objects, and implies how well users understand their connection. This relates to the mental model a user builds of the control and its expected outcome. When we see the doorbell, we have learned that when pushed, it will sound a chime that can be heard from the inside of the house or building to notify the person inside, that someone is waiting outside by the door. |

=== Mapping === The term ''mapping'' within interactive contexts describes the relationship established between two objects, and implies how well users understand their connection. This relates to the mental model a user builds of the control and its expected outcome. When we see the doorbell we have learned that when we push it, it will sound a chime that can be heard from within the house or building to notify the person inside that someone is waiting outside by the door. |

| Line 36: | Line 36: |

| Our ten year old scary monster, mapped that pushing and sounding of the doorbell will both notify the people inside that a trick or treater is there and that he will receive a handful of free candy. | Our 10-year-old monster mapped that pushing and sounding of the doorbell will both notify the people inside that a trick-or-treater is there and that he will receive a handful of free candy. |

| Line 38: | Line 38: |

| So mapping relates heavily on context. If the boy pushes the doorbell on a night other than Halloween, the outcome will differ. The sounding chime will remain which indicates a person is waiting outside, but the likelihood of someone there to answer and even more, to hand out candy, is much more unlikely. | So mapping relies heavily on context. If the boy pushes the doorbell on a night other than Halloween, the outcome will differ. The sounding chime will remain, which indicates a person is waiting outside, but the likelihood of someone being there to answer and, what’s more, to hand out candy, is much lower. |

| Line 40: | Line 40: |

| On a mobile device, controls that resemble our cultural standards are going to be well understood. For example, let’s relate volume with a control. In the context of a phone call, pushing the volume control is expected to either increase or reduce the volume levels. However, if the context changes, i.e, on the idle screen, that button may provide additional functionality, bringing up a modal pop-up to control screen brightness, and volume levels. Just like call volume, pushing up will still perform an increase and pushing down will perform a decrease in those levels. | On a mobile device, controls that resemble our cultural standards are going to be well understood. For example, let’s relate volume with a control. In the context of a phone call, pushing the volume control is expected to either increase or decrease the volume level. However, if the context changes, for example, on the Idle screen, that button may provide additional functionality, bringing up a modal pop up to control screen brightness and volume levels. Just like call volume, pushing up will still perform an increase and pushing down will perform a decrease in those levels. |

| Line 42: | Line 42: |

| But you must adhere to common mapping principles related to your user’s understanding of control display compatibility. On the iPhone, in order to take a screen shot, you must hold the power button at the same time as the home button. This type interaction is very confusing, and impossible to discover unless you read the manual (or otherwise look it up, or are told), and is hard to remember as the controls have no relation to the functional result. Onscreen and kinesthetic gestures can be problematic too if the action isn’t related to the type of reaction expected. Use natural body movements that mimic the way the device should act. Do not use arbitrary or uncommon gestures. |

But you must adhere to common mapping principles related to your user’s understanding of control display compatibility. On the iPhone, in order to take a screenshot you must hold the power button at the same time as the home button. This type of interaction is very confusing, is impossible to discover unless you read the manual (or otherwise look it up, or are told), and is hard to remember as the controls have no relation to the functional result. On-screen and kinesthetic gestures can be problematic too if the action isn’t related to the type of reaction expected. Use natural body movements that mimic the way the device should act. Do not use arbitrary or uncommon gestures. |

| Line 47: | Line 46: |

| == Affordances == Affordances describe that an object’s function can be understood based on its properties. The doorbell extends outward, can be round or rectangular, and has a target touch size larger enough for a finger to push. Its characteristics afford contact and pushing. |

=== Affordances === ''Affordances'' describe that an object’s function can be understood based on its properties. The doorbell extends outward, can be round or rectangular, and has a target touch size large enough for a finger to push. Its characteristics afford contact and pushing. |

| Line 50: | Line 49: |

| On mobile devices, physical keys that extend outward or recessed inward afford pushing, rotating, or sliding. Keys that are grouped in proximity afford a common relationship between the two of them, many times polar functionality. For example, 4 way keys afford directional scrolling while and the center key affords selection. | On mobile devices, physical keys that extend outward or are recessed inward afford pushing, rotating, or sliding. Keys that are grouped in proximity afford a common relationship, many times a polar functionality. For example, four-way keys afford directional scrolling while the center key affords selection. |

| Line 52: | Line 51: |

| Yes, affordances are just like other understandings of interactive controls and are learned. Very few of these are truly "intuitive," (meaning innately understandable) despite the common use of the term, and may vary by the user's background such as regional or cultural differences. | Yes, affordances are just like other understandings of interactive controls and are learned. Very few of these are truly ''intuitive'' (meaning innately understandable) despite the common use of the term, and may vary by the user’s background, including regional or cultural differences. |

| Line 55: | Line 54: |

| == Provide Constraints == {{attachment:GeneralInteractiveIntro-POS.png|Pen tablets are reasonably common in industry, for sales and checkout (as shown here), and for various types of data gathering or interchange. The interaction methods allow the user to remain standing, or use the device in situations where a laptop would take too much effort or attention. Touch is already making some headway into these markets, and gesture interfaces may be even more suitable for some of the dirty and dangerous fields.|align="right"}} |

=== Provide Constraints === [[http://www.flickr.com/photos/shoobe01/6501536679/|{{attachment:GeneralInteractiveIntro-POS.png|Figure 10-2. Pen tablets are reasonably common in industry, for sales and checkout (as shown here), and for various types of data gathering or interchange. The interaction methods allow the user to remain standing, or to use the device in situations where a laptop would take too much effort or attention. Touch is already making some headway into these markets, and gesture interfaces may be even more suitable for some fields that are dirty and/or dangerous.}}]] |

| Line 58: | Line 57: |

| Restrictions on behavior can be both natural and cultural. They can be both positive and negative, and they can prevent undesired results such as loss of data, or unnecessary state changes. | Restrictions on behavior can be both natural and cultural. They can be both positive and negative, and they can prevent undesired results such as loss of data, or unnecessary state changes. |

| Line 60: | Line 59: |

| Our doorbell from above could only be pushed not pulled (in fact, a style of older doorbell did require pulling a significant distance). The distance which the button could be offset is restricted by the mechanics of the device; it cannot be moved any direction except along the "z" axis. | Our doorbell could only be pushed and not pulled (in fact, a style of older doorbell did require pulling a significant distance). The distance which the button could be offset is restricted by the mechanics of the device; it cannot be moved in any direction except along the z-axis. |

| Line 62: | Line 61: |

| Despite the small surface and touch size, the button can still be pressed down by a finger, a entire hand or any object, even one much larger than the button’s surface. In this context, that lack of constraint was beneficial. A user doesn’t have to be entirely accurate using the tip of the finger to access the device. Our scary monster, with huge latex hands holding a sword was still able to push the button down, allowing for a quick interaction. More commonly, the doorbell can be pushed by someone in mittens, or by an elbow when carrying the groceries in. | Despite the small surface and touch size, the button can still be pressed down with a finger, an entire hand, or any object, even one much larger than the button’s surface. In this context, that lack of constraint was beneficial. A user doesn’t have to be entirely accurate using the tip of the finger to access the device (see Figure 10-1). Our trick-or-treater, with huge latex hands holding a sword, was still able to push the button down, allowing for a quick interaction. More commonly, the doorbell can be pushed by someone wearing mittens or by an elbow if the person is carrying groceries. |

| Line 66: | Line 65: |

| * '''Contact Pressure''' – Having a threshold of contact pressure that initiates the control should be used to prevent accidental input. Touch screens and similar controls without perceptible movement will have a lower threshold, as opposed to hardware keys which move to make contact. * '''Time of contact'''– Similar to contact pressure, requiring keys remain pressed longer may provide a useful constraint to accessing buttons that can lose user-generated data. Pushbutton power keys, for example, do not immediately turn devices on or off but must be held for a few seconds. * '''Axis of control''' – The amount of directional movement can be restricted to a single axis or a particular angle or movement. Gestural interactive controls must use appropriate constraints so they are not activated out of context, even though the user's finger is not constrained itself. Unlocking an idle screen may require a one-axis drag, to access additional features on the device. This is not different for hardware keys; the user may "nudge" controls by using scroll controls at an angle. Only the input hardware or sensing may be constrained. * '''Size of control''' – The ability to interact with a button can heavily depend on it’s physical size and its proximity to other buttons or hardware elements. With on-screen targets, careful consideration needs to be used when designing UIs that have these interactive controls. Small touch targets must have larger touch areas, and not be too close to a raised bezel. Refer to the General Touch Interaction Guidelines found earlier in the Input & Output Section. |

''Contact pressure'' A threshold of contact pressure that initiates the control should be used to prevent accidental input. Touchscreens and similar controls without perceptible movement will have a lower threshold, as opposed to hardware keys which move to make contact. |

| Line 71: | Line 68: |

| == Use Feedback == {{attachment:GeneralInteractiveIntro-Together.png|Working or playing in groups encourages other types of interactions, like the use of remotes, and gestural interfaces. Television, games, and collaboration all have similar needs based on the distance from the screen, and the sharing.|align="right"}} |

''Time of contact'' Similar to contact pressure, requiring that keys remain pressed for longer periods may provide a useful constraint to accessing buttons that can lose user-generated data. Pushbutton power keys, for example, do not immediately turn devices on or off but must be held for a few seconds. |

| Line 74: | Line 71: |

| Feedback describes the immediate perceived result of an interaction. It confirms that action took place and presents us with more information. Without feedback, the user may believe the action never took place leading to frustration and repetitive input attempts. The pushed doorbell provides immediate audio feedback that can be heard from outside as well as inside the house. Illuminated doorbells also darken while being pressed, providing additional feedback to the user as well as confirmation in loud environments, for more sound-proof houses or for users with auditory deficits. | ''Axis of control'' The amount of directional movement can be restricted to a single axis or a particular angle or movement. Gestural interactive controls must use appropriate constraints so that they are not activated out of context, even though the user’s finger is not constrained itself. Unlocking an Idle screen may require a one-axis drag to access additional features on the device. This is not different for hardware keys; the user may “nudge” controls by using scroll controls at an angle. Only the input hardware or sensing may be constrained. |

| Line 76: | Line 74: |

| Use feedback properly. Unlike the doorbell's immediate indicator of of action, an elevator call or floor button (both work the same, as they should) remains illuminated as a request-for-service, until it has been fulfilled. As an anti-pattern, crosswalk request buttons generally provide no feedback, or beep and illuminate as pressed. There is no assurance the request was received, or is properly queued. | ''Size of control'' The ability to interact with a button can heavily depend on its physical size and its proximity to other buttons or hardware elements. With on-screen targets, you must carefully consider this when designing UIs that have these interactive controls. Small touch targets must have larger touch areas, and must not be too close to a raised bezel. Consider as well the use of other sensors, and how [[Kinesthetic Gestures]] can change the effective control size, such as for the card reader in Figure 10-2. Refer to the section "[[General Touch Interaction Guidelines]]" in Appendix D. |
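The four constraints described above (contact pressure, time of contact, axis of control, and size of control) can be sketched in a few lines. This is only an illustration under stated assumptions: the function names, the `Press` record, and every threshold value are hypothetical and do not come from the text or from any platform API.

```python
from dataclasses import dataclass
from typing import Tuple

# All values below are illustrative assumptions, not figures from the text.
PRESSURE_THRESHOLD = 0.3   # normalized 0..1 contact pressure
HOLD_SECONDS = 2.0         # how long a power key must be held
MIN_TOUCH_MM = 10.0        # smallest touch area we allow


@dataclass
class Press:
    """One contact with a control (hypothetical record)."""
    down_at: float    # time the contact began, in seconds
    up_at: float      # time the contact ended, in seconds
    pressure: float   # normalized contact pressure, 0..1


def press_registers(press: Press, threshold: float = PRESSURE_THRESHOLD) -> bool:
    """Contact pressure: ignore touches lighter than the threshold."""
    return press.pressure >= threshold


def power_key_fires(press: Press, hold_seconds: float = HOLD_SECONDS) -> bool:
    """Time of contact: act only when the key was held long enough."""
    return (press.up_at - press.down_at) >= hold_seconds


def constrain_to_axis(dx: float, dy: float, axis: str = "x") -> Tuple[float, float]:
    """Axis of control: discard movement off the permitted axis."""
    if axis == "x":
        return (dx, 0.0)
    if axis == "y":
        return (0.0, dy)
    raise ValueError("axis must be 'x' or 'y'")


def touch_area_mm(visible_mm: float, minimum_mm: float = MIN_TOUCH_MM) -> float:
    """Size of control: a small visible target still gets a larger touch area."""
    return max(visible_mm, minimum_mm)
```

Note that the finger itself is never constrained here; only the interpretation of the input is, which matches the point that only the input hardware or sensing may be constrained.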

| Line 78: | Line 77: |

| On mobile devices, when we click, select an object, or move the device, we expect an immediate response. With general interactive controls, feedback is experienced in multiple ways. A single object or entire image may change shape, size, orientation, color, or position. Devices that use accelerometers provide immediate feedback showing page flips, rotations, expand, and slide. | === Use Feedback === ''Feedback'' describes the immediate perceived result of an interaction. It confirms that action took place and presents us with more information. Without feedback, the user may believe the action never took place, leading to frustration and repetitive input attempts. The pushed doorbell provides immediate audio feedback that can be heard from outside as well as inside the house. Illuminated doorbells also darken while being pressed, providing additional feedback to the user as well as confirmation in loud environments, for more soundproof houses, or for users with auditory deficits. Use feedback properly. Unlike the doorbell’s immediate indicator of an action, an elevator call or floor button (both work the same, as they should) remains illuminated as a request-for-service, until it has been fulfilled. As an antipattern, crosswalk request buttons generally provide no feedback, or beep and illuminate as pressed. There is no assurance that the request was received, or is properly queued. On mobile devices, when we click, select an object, or move the device we expect an immediate response. With general interactive controls, feedback is experienced in multiple ways. A single object or entire image may change shape, size, orientation, color, or position. Devices that use accelerometers provide immediate feedback showing page flips, rotations, expansion, and sliding. |

| Line 82: | Line 89: |

| There are a growing number of devices today that are using gestural interactive controls as the primary method input. We can expect smartphones, tablets, and game systems to have some level of these types of controls. | A growing number of devices today are using gestural interactive controls as the primary input method. We can expect smartphones, tablets, and game systems to have some level of these types of controls. |

| Line 84: | Line 91: |

| Gestural interfaces have a unique set of guidelines that other interactive controls need not follow. | Gestural interfaces have a unique set of guidelines that other interactive controls need not follow: * One of the most important rules of gestural controls is that they need to resemble natural human behavior. This means the device behavior must match the type of gestural behavior the human is carrying out. * Simple tasks should have simple gestures. A great colleague and friend of mine who is visually impaired was discussing her opinions on gestural devices. She provided me an example of how gestures actually make her interactions quite useful. If she types an entire page, and finds later that she is unhappy with what she wrote, she can shake her device to erase the entire page. A simple shake won’t activate this feature (constraint), so she is confident she won’t lose her data accidentally. But rather than her having to delete the text line by line, this simple gesture quickly allows her to perform an easy task. |
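The shake-to-erase example above depends on a constraint: a single casual movement must not trigger the erase. One way to picture that is to require several distinct strong peaks in the accelerometer signal. This is a minimal sketch, not how any particular device does it; the function name, the threshold in g, and the peak count are all assumptions made for illustration.

```python
from typing import Sequence


def is_deliberate_shake(
    magnitudes: Sequence[float],
    threshold: float = 2.5,   # assumed acceleration threshold, in g
    min_peaks: int = 3,       # assumed number of separate peaks required
) -> bool:
    """Return True when the signal crosses the threshold enough separate times.

    A peak is counted each time the magnitude rises above the threshold
    after having been at or below it, so one long push counts only once.
    """
    peaks = 0
    above = False
    for magnitude in magnitudes:
        if magnitude > threshold and not above:
            peaks += 1
        above = magnitude > threshold
    return peaks >= min_peaks
```

Raising `min_peaks` makes accidental erasure less likely at the cost of a more effortful gesture, which is the trade-off the constraint is meant to manage.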

| Line 86: | Line 95: |

| * One of the most important rules of gestural controls is that they need to resemble natural human behavior. This means that device behavior must match the type of gestural behavior the human is carrying out. * Simple tasks should have simple gestures. A great colleague and friend of mine who is visually impaired was discussing her opinions on gestural devices. She provided me an example of how gestures actually make her interactions quite useful. If she typed up an entire page, and finds later that she is unhappy with what she wrote, she can shake her device to erase the entire page. A simple shake won’t active this feature (constraint) so she is confident she won’t lose her data accidentally. But rather than her having to delete line by line, this simple gesture quickly allows her to perform an easy task. |

Dan Saffer (Saffer 2009) points out five reasons to use interactive gestures: ''More natural interactions'' People naturally interact with and manipulate physical objects. |

| Line 89: | Line 99: |

| Dan Saffer, author of ''Designing Gestural Interfaces'' (Saffer, 2009) points out five reasons to use interactive gestures: | ''Less cumbersome or visible hardware'' Mobile devices are everywhere: in our pockets and hands, on tables and storefront walls, and in kiosks (see Figure 10-3). Gestural controls don’t rely on physical components such as keyboards and mice to manipulate the device. |

| Line 91: | Line 102: |

| 1. '''More natural interactions''' -- People naturally interact and manipulate physical objects. 2. '''Less cumbersome or visible hardware''' -- Mobile devices are everywhere: in our pockets, hands, tables, on storefront walls, or kiosks. Gestural controls don’t rely on the large physical components such as keyboards, and mice to manipulate the device. 3. '''More flexibility''' -- Using sensors that can detect our body movements remove our hand-eye dependence and coordination normally required on small mobile screens. 4. '''More nuance''' -- A lot of human gestures are related to subtle emotional forms of communication. Like winking, smiling, rolling our eyes. The nuance gestures have yet to be fully explored in today’s devices, leaving an area of opportunity in user experience. 5. '''More fun''' -- Today's gesture based games encourage full body movement. Not only is it fun, it provokes a fully-engaging social context. |

''More flexibility'' Using sensors that can detect our body movements removes our hand-eye dependence and coordination normally required on small mobile screens. |

| Line 97: | Line 106: |

| ''More nuance'' A lot of human gestures are related to subtle emotional forms of communication, such as winking, smiling, and rolling our eyes. The nuance gestures have yet to be fully explored in today’s devices, leaving an area of opportunity in user experience. ''More fun'' Today’s gesture-based games encourage full-body movement. Not only is this fun, but it also provokes a fully engaging social context. [[http://www.flickr.com/photos/shoobe01/6501540889/in/photostream/|{{attachment:GeneralInteractiveIntro-Together.png|Figure 10-3. Working or playing in groups encourages other types of interactions, such as the use of remotes and gestural interfaces.}}]] |

|

| Line 100: | Line 116: |

| The patterns within this chapter describe how General Interactive Controls can be used to initiate various forms of interaction on mobile devices. The following patterns in the chapter will be discussed. | The patterns in this chapter describe how you can use general interactive controls to initiate various forms of interaction on mobile devices: |

| Line 102: | Line 118: |

| [[Directional Entry]] -– Controls used to select and otherwise interact with items on the screen, a regular, predictable method of input must be made available. All mobile interactive devices use list and other paradigms that require indicating position within the viewport. | ''[[Directional Entry]]'' With controls used to select and otherwise interact with items on the screen, a regular, predictable method of input must be made available. All mobile interactive devices use lists and other paradigms that require indicating position within the viewport. |

| Line 104: | Line 121: |

| [[Press-and-hold]] -– This mode switch selection function can be used to initiate an alternative interaction. | ''[[Press-and-Hold]]'' This mode switch selection function can be used to initiate an alternative interaction. |

| Line 106: | Line 124: |

| [[Focus & Cursors]] -– The position of input behaviors must be clearly communicated to the user. Within the screen, inputs may often occur at any number of locations, and especially for text entry the current insertion point must be clearly communicated at all times. | ''[[Focus & Cursors]]'' The position of input behaviors must be clearly communicated to the user. Within the screen, inputs may often occur at any number of locations, and especially for text entry the current insertion point must be clearly communicated at all times. |

| Line 108: | Line 127: |

| [[Other Hardware Keys]] -– Functions on the device, and in the interface, are controlled by a series of keys arrayed around the periphery of the device. Users must be able to understand, learn and control their behavior. | ''[[Other Hardware Keys]]'' Functions on the device and in the interface are controlled by a series of keys arrayed around the periphery of the device. Users must be able to understand, learn, and control their behavior. |

| Line 110: | Line 130: |

| [[Accesskeys]] -– Provide one-click access to functions and features of the handset, application or site for any device with a hardware keyboard or keypad. | ''[[Accesskeys]]'' These provide one-click access to functions and features of the handset, application, or site for any device with a hardware keyboard or keypad. |

| Line 112: | Line 133: |

| [[Dialer]] -– Numeric entry for the dialer application or mode to access the voice network varies from other entry methods, and has developed common methods of operation that users are accustomed to. | ''[[Dialer]]'' Numeric entry for the dialer application or mode to access the voice network varies from other entry methods, and has developed common methods of operation that users are accustomed to. |

| Line 114: | Line 136: |

| [[On-screen Gestures]] -– Instead of physical buttons and other input devices mapped to interactions, these allow the user to directly interact with on-screen objects and controls. | ''[[On-screen Gestures]]'' Instead of physical buttons and other input devices mapped to interactions, these allow the user to directly interact with on-screen objects and controls. |

| Line 116: | Line 139: |

| [[Kinesthetic Gestures]] -– Instead of physical buttons and other input devices mapped to interactions, these allow the user to directly interact with on-screen objects and controls using body movement. | ''[[Kinesthetic Gestures]]'' Instead of physical buttons and other input devices mapped to interactions, these allow the user to directly interact with on-screen objects and controls using body movement. |

| Line 118: | Line 142: |

| [[Remote Gestures]] -– A handheld remote device, or the user alone, is the best, only or most immediate method to communicate with another, nearby device with display. | ''[[Remote Gestures]]'' A handheld remote device, or the user alone, is the best, only, or most immediate method to communicate with another, nearby device with a display. |

Darkness

It’s pitch-black outside. The air is cold and wet, yet it carries a lingering sweet smell. Sporadic beams of light dance in the night, casting an eerie glow on the landscape. Giggles, whispers, and even the occasional scream carry through the streets, a reminder that others are out and about.

Through the eyes of one of these figures, a house is seen. The figure changes course and heads in the direction of the house. The house is unlit and looks unoccupied. In one hand the figure holds a large sack; the other wields a blunt sword.

As the figure makes his way up the porch and to the door, the hand that is holding the sword points forward. The hand is not a human’s hand. It’s about twice as big as a man’s hand. Coarse, dark fur covers its skin, while jagged claws extend from the aged fingers.

The creature now stands directly in front of the door, its purpose clear. It only wants one thing and that thing remains inside the house. With a blast of energy the hand with the sword raises, lunges, and slams into the house.

A chime echoes. The front door opens. The man who opens the door smiles happily while looking down, hardly frightened by the four-foot-tall, hairy monster screaming “Trick or treat!”

That Sounds Like a Great Idea

Take a moment to catch your breath and slow your heartbeat. Despite my enjoyment of Halloween, it’s not my focus for the remainder of this chapter. However, the doorbell that so frequently sounds during that annual holiday is.

The doorbell is an outstanding example of an effective interactive control. If a 10-year-old dressed as a monster with oversize latex hands can use it effortlessly in the dark, and anyone listening in the house (even the family dog) instantly understands what it means, it must work well!

Let’s examine why the doorbell is an effective control, using Donald Norman’s Interaction Model.

Make it Visible

Any control needs to be visible when an action or state change requires its presence. The doorbell is an example of an “always present” control. There aren’t that many components on the door anyway (doorknob, lock, windows and peepholes, maybe a knocker), so the doorbell is easy to locate. In many cases the doorbell is illuminated, making it visible and enticing when lighting conditions are poor.

Cultural norms and prior experiences have developed a mental model in which we expect the doorbell to be placed in a specific location: within sight, within easy reach, and to the side of the door. So our scary monster was able to locate the doorbell quickly, even with his relatively limited prior knowledge, and to see it in the dark.

Having an object visible doesn’t have to mean it can be seen. It can also mean the object is detected. Consider someone who is visually impaired. She still has the prior knowledge that the doorbell is located on the side of the door, at eye level and within reach. Its shape is uniquely tactile, making it easy to detect when fingers or hands make contact with it. Most doorbells sit in a notably raised housing, and although it’s not clear whether this is an artifact of engineering, it also makes the doorbell easy to find and identify by touch.

In mobile devices this is a very important principle. Many times we don’t have the opportunity to always look at the display for a button on the screen, but we can feel the different hardware keys. Consider people playing video games. Their attention is on the TV, not the device controller, yet they are easily able to push the correct buttons during game play.

Mapping

The term mapping within interactive contexts describes the relationship established between two objects, and implies how well users understand their connection. This relates to the mental model a user builds of the control and its expected outcome. When we see a doorbell, we have learned that pushing it will sound a chime that can be heard inside the house or building, notifying the person inside that someone is waiting at the door.

Our 10-year-old monster mapped pushing the doorbell to two outcomes: the chime will notify the people inside that a trick-or-treater is there, and he will receive a handful of free candy.

So mapping relies heavily on context. If the boy pushes the doorbell on a night other than Halloween, the outcome will differ. The chime will still sound, indicating that a person is waiting outside, but the likelihood of someone being there to answer and, what’s more, to hand out candy is much lower.

On a mobile device, controls that resemble our cultural standards are going to be well understood. For example, consider the volume control. In the context of a phone call, pushing the volume control is expected to either increase or decrease the volume level. However, if the context changes, for example to the Idle screen, that button may provide additional functionality, bringing up a modal pop-up to control screen brightness and volume levels. Just as with call volume, pushing up will still increase those levels and pushing down will decrease them.
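The volume example can be sketched in a few lines of code. This is only an illustration under stated assumptions: the context names, setting names, and the 0 to 10 level range are hypothetical, not taken from any real handset. It shows that the setting being adjusted changes with context while the up/down mapping stays the same.

```python
# Hypothetical level range and step; real devices will differ.
MIN_LEVEL, MAX_LEVEL, STEP = 0, 10, 1

# Which setting the volume keys adjust in each context (assumed names).
TARGET_BY_CONTEXT = {"in_call": "call_volume", "idle": "ringer_volume"}


def press_volume_key(direction, context, levels):
    """Return (updated_levels, show_popup) for one press of a volume key.

    "up" always increases and "down" always decreases, whatever the
    context; only the adjusted setting and the pop-up depend on context.
    """
    if direction not in ("up", "down"):
        raise ValueError("direction must be 'up' or 'down'")
    target = TARGET_BY_CONTEXT[context]
    delta = STEP if direction == "up" else -STEP
    # Clamp so repeated presses cannot leave the valid range.
    new_level = max(MIN_LEVEL, min(MAX_LEVEL, levels[target] + delta))
    updated = dict(levels)
    updated[target] = new_level
    # On the Idle screen the press also brings up a modal pop-up.
    show_popup = context == "idle"
    return updated, show_popup
```

Keeping the direction mapping outside the context lookup is the point of the sketch: a new context adds a row to the table, but never changes what "up" means.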

But you must adhere to common mapping principles related to your user’s understanding of control-display compatibility. On the iPhone, in order to take a screenshot you must hold the power button at the same time as the home button. This type of interaction is very confusing, is impossible to discover unless you read the manual (or otherwise look it up, or are told), and is hard to remember because the controls have no relation to the functional result. On-screen and kinesthetic gestures can be problematic too if the action isn’t related to the type of reaction expected. Use natural body movements that mimic the way the device should act. Do not use arbitrary or uncommon gestures.

Affordances

Affordances describe how an object’s function can be understood from its properties. The doorbell extends outward, can be round or rectangular, and has a target touch size large enough for a finger to push. Its characteristics afford contact and pushing.

On mobile devices, physical keys that extend outward or are recessed inward afford pushing, rotating, or sliding. Keys that are grouped in proximity afford a common relationship, many times a polar functionality. For example, four-way keys afford directional scrolling while the center key affords selection.

Yes, affordances, like other understandings of interactive controls, are learned. Very few of these are truly intuitive (meaning innately understandable) despite the common use of the term, and they may vary by the user’s background, including regional or cultural differences.

Provide Constraints

Restrictions on behavior can be both natural and cultural. They can be both positive and negative, and they can prevent undesired results such as loss of data, or unnecessary state changes.

Our doorbell can only be pushed, not pulled (although one older style of doorbell did require pulling a significant distance). The distance the button can travel is restricted by the mechanics of the device; it cannot be moved in any direction except along the z-axis.
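A software control can impose the same kind of constraint on a requested movement. The following is a minimal sketch, assuming a hypothetical `constrain_press` helper and an arbitrary maximum travel of 2.0 units; neither comes from the book.

```python
def constrain_press(dx, dy, dz, max_travel=2.0):
    """Constrain a requested displacement to the z-axis only.

    Sideways movement (dx, dy) is discarded, and the press depth is
    clamped to the range 0..max_travel, so the control can be pushed
    in but never pulled out or pushed past its stop.
    """
    depth = max(0.0, min(max_travel, dz))
    return (0.0, 0.0, depth)
```

For example, a diagonal shove such as `constrain_press(3, -1, 1.5)` is reduced to a straight press of depth 1.5, and a pull (negative `dz`) is ignored.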

Despite the small surface and touch size, the button can still be pressed down with a finger, an entire hand, or any object, even one much larger than the button’s surface. In this context, that lack of constraint was beneficial. A user doesn’t have to be entirely accurate using the tip of the finger to access the device (see Figure 10-1). Our trick-or-treater, with huge latex hands holding a sword, was still able to push the button down, allowing for a quick interaction. More commonly, the doorbell can be pushed by someone wearing mittens or by an elbow if the person is carrying groceries.
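An on-screen control can be given a similar tolerance by accepting touches in an area larger than the drawn target. The sketch below assumes a simple rectangle model and a hypothetical `slop` margin in screen units; the names and the margin value are illustrative, not recommended sizes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    x: float
    y: float
    width: float
    height: float

    def expanded(self, margin):
        """Return a copy grown by `margin` on every side."""
        return Rect(self.x - margin, self.y - margin,
                    self.width + 2 * margin, self.height + 2 * margin)

    def contains(self, px, py):
        """True if the point lies inside or on the edge of the rectangle."""
        return (self.x <= px <= self.x + self.width
                and self.y <= py <= self.y + self.height)


def hit_test(visible, touch_x, touch_y, slop=0.0):
    """Accept a touch that lands within `slop` of the visible control."""
    return visible.expanded(slop).contains(touch_x, touch_y)
```

With a 20 by 20 button at (100, 100), a touch at (95, 110) misses the drawn button but is accepted once `slop` is 8, so the user does not have to be entirely accurate.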

On mobile devices, however, it’s often necessary to define constraints on general interactive controls. Some constraints that should be considered involve:

Contact pressure

Time of contact

Axis of control

Size of control

The ability to interact with a button can heavily depend on its physical size and its proximity to other buttons or hardware elements. With on-screen targets, you must carefully consider this when designing UIs that have these interactive controls. Small touch targets must have larger touch areas, and must not be too close to a raised bezel. Consider as well the use of other sensors, and how Kinesthetic Gestures can change the effective control size, such as for the card reader in Figure 10-2. Refer to the section "General Touch Interaction Guidelines" in Appendix D.

Use Feedback

Feedback describes the immediate perceived result of an interaction. It confirms that action took place and presents us with more information. Without feedback, the user may believe the action never took place, leading to frustration and repetitive input attempts.

The pushed doorbell provides immediate audio feedback that can be heard from outside as well as inside the house. Illuminated doorbells also darken while being pressed, providing additional feedback to the user as well as confirmation in loud environments, for more soundproof houses, or for users with auditory deficits.

Use feedback properly. Unlike the doorbell’s immediate indicator of an action, an elevator call or floor button (both work the same, as they should) remains illuminated as a request-for-service, until it has been fulfilled. As an antipattern, crosswalk request buttons generally provide no feedback, or beep and illuminate as pressed. There is no assurance that the request was received, or is properly queued.

On mobile devices, when we click, select an object, or move the device we expect an immediate response. With general interactive controls, feedback is experienced in multiple ways. A single object or entire image may change shape, size, orientation, color, or position. Devices that use accelerometers provide immediate feedback showing page flips, rotations, expansion, and sliding.

Gestural Interactive Controls

A growing number of devices today are using gestural interactive controls as the primary input method. We can expect smartphones, tablets, and game systems to have some level of these types of controls. Gestural interfaces have a unique set of guidelines that other interactive controls need not follow: Dan Saffer (Saffer 2009) points out five reasons to use interactive gestures:

Less cumbersome or visible hardware

More flexibility

More fun

Patterns for General Interactive Controls

The patterns in this chapter describe how you can use general interactive controls to initiate various forms of interaction on mobile devices:

Press-and-Hold

Focus & Cursors

Other Hardware Keys

Accesskeys

Dialer

On-screen Gestures

Kinesthetic Gestures

Remote Gestures

Discuss & Add

Please do not change content above this line, as it's a perfect match with the printed book. Everything else you want to add goes down here.

Examples

If you want to add examples (and we occasionally do also) add them here.

Make a new section

Just like this. If, for example, you want to argue about the differences between, say, Tidwell's Vertical Stack, and our general concept of the List, then add a section to discuss. If we're successful, we'll get to make a new edition and will take all these discussions into account.