
← Revision 33 as of 2011-12-13 19:39:41 ⇥

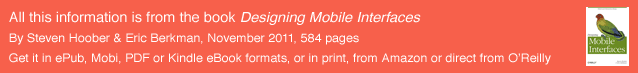

{{attachment:AudioIntro-Volume.png|Control of the volume level is usually still performed by hardware keys, due to the importance. This makes it an interesting control as it is always pseudo-modal. The user enters the mode by using the keys, which loads a screen, layer or other widget to indicate the volume change. Related controls, silence and vibrate, are associated with this mode as well.}} |

[[http://www.flickr.com/photos/shoobe01/6501542443/|{{attachment:AudioIntro-Volume.png|Figure 12-1. Control of volume level is usually still performed by hardware keys due to its importance. This makes it an interesting control as it is always pseudomodal. The user enters the mode by using the keys, which loads a screen, layer, or other widget to indicate the volume change. Related controls, silence and vibrate, are associated with this mode as well.}}]] |

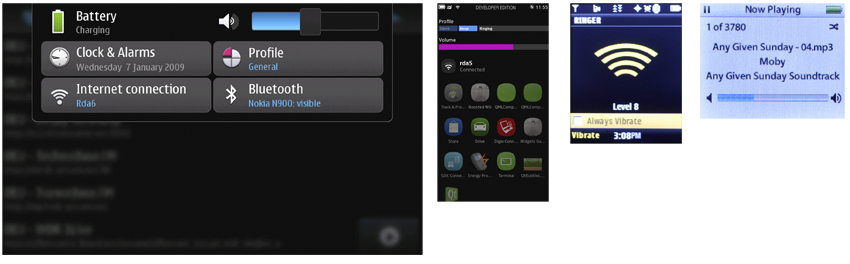

[[http://www.flickr.com/photos/shoobe01/6501544267/|{{attachment:AudioIntro-Speech.png|Figure 12-2. Voice inputs can help users in situations where visibility is limited or nonexistent, but they have to be part of a complete voice UI. Don’t just perform one or two steps of a process and then require the user to read and provide feedback on the screen.}}]] === Signal-to-Noise Ratio Guidelines === People will use their mobile devices in any environment and context. In many situations, they will rely on the device’s voice input and output functions during use. See Figure 12-2. But, whether the person is inside or outside, her ability to hear certain speech decibels apart from other external noises can be quite challenging. Here are some guidelines to follow when designing mobile devices that rely on speech output and input: * Signal-to-noise ratio (S/N) is calculated by subtracting the noise decibels from the speech decibels. * To successfully communicate voice messages in background noise, the speech level should exceed the noise level by at least 6 decibels (dB) (Bailey 1996). * A user’s audio recall is enhanced when grammatical pauses are inserted in synthetic speech (Nooteboom 1983). * Synthetic speech is less intelligible in the presence of background noise at a 10 dB S/N. * When the noise level is +12 dB to the signal level, the consonants m, n, d, g, b, v, and z are confused with one another. * When the noise level is +18 dB to the signal level, all consonants are confused with one another (Kryter 1972). |

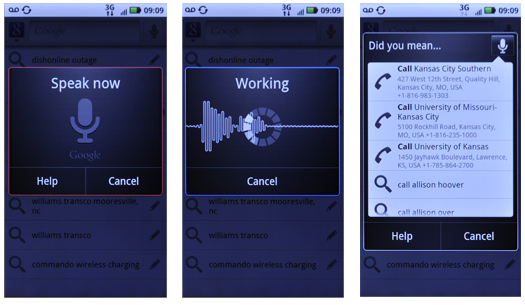

| Depending on our users needs, their sensory limitations, and the environment in which mobile is used, vibration feedback can provide another powerful sensation to communicate meaning. | [[http://www.flickr.com/photos/shoobe01/6501545515/in/photostream/|{{attachment:AudioIntro-Motor.png|Figure 12-3. Vibrate on most devices is coarse, and is provided by a simple motor with an off-center weight. Here, it is the silver cylinder between the camera and the external screen; the motor is mostly covered by a ribbon cable. It is mounted into a rubber casing, to avoid vibrating the phone to pieces, but this also reduces the fidelity of specific vibrate patterns, if you were to try to use it for that purpose. The figure on the right shows the motor assembly on its own.}}]] |

The Big Tooter

High atop Mount Oread, in the picturesque city of Lawrence, Kansas, stands a whistle—a whistle with quite a history composed of tradition, controversy, and headache that started about a hundred years ago. The whistle is known as the “Big Tooter.”

March 25, 1912, 9:50 a.m.: a deafening shrill begins. For five earsplitting seconds the power plant steam whistle at the University of Kansas sounds. The sound is so loud it can be heard from one side of the city to the other. It’s the first time the whistle is used to signal the end of each hour of class time.

According to the student newspaper The Daily Kansan, the whistle was not only used to replace the untimely and inconsistent ringing of bells with a standard schedule of marked time, but was also used to remind professors to end their lectures immediately. Prior to the whistle, too often professors would keep students past the 55 minutes of class time, causing them to be late for their next class. With the new sound system in place, even the chancellor had something to say.

“If the instructor isn’t through when the whistle blows,” said KU Chancellor Frank Strong to the student body, “get up and go.”

The Big Tooter Today

For the past 100 years, the Big Tooter has been the deafening reminder to faculty and students about punctuality and when to cover their ears. I can say I, too, was one of those students who would purposely alter my walk to class to avoid that sound at its loudest range. But although the steam whistle is excruciatingly loud, it serves its purpose as an audio alert. It is so distinctive that it is never misunderstood, and so reliable that it is always trusted.

The Importance of Audition

From the preceding example we see that people can benefit from specific sounds that are associated with contextual meaning. Using audition in the mobile space can take advantage of this very important concept, for the following reasons:

- Our mobile devices may be placed and used anywhere. In these constantly changing environmental contexts, users are surrounded by external stimuli that are constantly fighting for their attention.

- The device may be out of our field of view or range of vision, but still within hearing range.

- Using audition together with other sensory cues can help to reinforce and strengthen users’ understanding of the interactive context.

- The user may have impaired vision, whether from a physiological deficit or from transient environmental or behavioral conditions, and so may need additional auditory feedback.

- The user may require auditory cues to refocus their attention on something needing immediate action.

Auditory Classifications

Audible sounds and notifications have become so commonplace today that we have learned to understand their meaning and quickly decide whether we need to attend to them in a particular context.

Warnings

Audible warnings indicate both the presence of danger and the fact that action is required to ensure one’s safety. These sounds are very loud (up to 130 dB), and some use dual frequencies to quickly distinguish themselves from other external noise that may be occurring at that time. Most often these warnings are used with visual outputs as well. Examples of warning sounds include:

- Railroad train crossings

- Emergency vehicle sirens

- Fire alarms

- Tornado sirens

- Emergency broadcast interrupts

- Fog horns

- Vehicle horns

Alerts and Notifications

Alerts do not signal that immediate action is required for safety. Instead, they are used to capture your attention to indicate that an action may be required or to let you know an action has completed. An alert can be a single sound or one that repeats over a period of time, and it can change in frequency. Mobile alerts are quite common. They must be distinguishable from one another and must never sound at the same time as other alerts. When appropriate, use visual indicators to reinforce their meanings. Use a limited number of alert sounds; otherwise, the user will not retain their contextual meaning. Examples of alert and notification sounds include:

- The beep of a metal detector when it detects something under the sand at the beach

- The chime of a doorbell

- The ring of an elevator upon arrival

- The beep of a crosswalk indicator

- The sound of a device being turned on or off

- The sound of a voicemail being received on your mobile device

- A low-battery-level notification

- A sound to indicate a meeting reminder
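The alert guidelines above (distinguishable sounds, no two alerts at once, a limited set of sounds) can be sketched as a small queue. This is only an illustration under stated assumptions: the class name `AlertQueue`, the cap of five sounds, and the sound file names are hypothetical and do not come from any platform API.

```python
from collections import deque


class AlertQueue:
    """Serializes alerts so that no two alert sounds play at the same time."""

    def __init__(self, max_sounds=5):
        # max_sounds is an assumed cap; the text only says "a limited number".
        self.max_sounds = max_sounds
        self.sounds = {}        # alert name -> sound file (hypothetical names)
        self.pending = deque()  # alerts waiting for the current one to finish
        self.playing = None     # name of the alert currently sounding, if any

    def register(self, name, sound_file):
        """Register an alert, keeping the set of sounds small and distinct."""
        if name not in self.sounds and len(self.sounds) >= self.max_sounds:
            raise ValueError("too many alert sounds for users to remember")
        for other_name, other_file in self.sounds.items():
            if other_name != name and other_file == sound_file:
                raise ValueError("each alert needs a distinguishable sound")
        self.sounds[name] = sound_file

    def request(self, name):
        """Queue an alert; return the name of the alert now playing."""
        if name not in self.sounds:
            raise KeyError(name)
        self.pending.append(name)
        return self._start_next()

    def finished(self):
        """Call when the current sound ends; return the next alert or None."""
        self.playing = None
        return self._start_next()

    def _start_next(self):
        # Start the next pending alert only if nothing is sounding right now.
        if self.playing is None and self.pending:
            self.playing = self.pending.popleft()
        return self.playing
```

A caller would register its few alerts once, call `request` when an event occurs, and call `finished` when playback ends. Pairing each alert with a visual indicator, as the text recommends, is left out of this sketch.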

Error Tones

Error tones are a form of immediate or slightly delayed feedback based on user input. These tones must sound within the context where the error occurred. Mobile error tones are often buzzers that indicate:

- The wrong choice or key was entered during input.

- A loading or synching process failed.

Voice Notifications

Voice notifications can be used as reminders when you are not holding your device, as well as notifications of incorrect or undetected input through voice, touch, or keypad. Use syntax that makes it clear what is being communicated. Keep voice notification messages short and simple. Examples of voice notifications include:

- A reminder to take your medication

- Turn-by-turn directions

- A request to repeat your last input because the system didn’t understand it

Feedback Tones

Feedback tones occur immediately after you press a key or button, such as one on a dialer. They confirm that an action has been completed. These may be heard as clicks or single tones. Feedback tones can occur when you:

- Enter phone numbers on a dialer

- Enter characters on a keyboard

- Hold down a key for an extended time to access an application such as voicemail

- Press a button to submit user-generated data

- Select incremental data on a tape or slider selector

Audio Guidelines in the Mobile Space

Signal-to-Noise Ratio Guidelines

People will use their mobile devices in any environment and context. In many situations, they will rely on the device’s voice input and output functions during use. See Figure 12-2. But whether a person is inside or outside, distinguishing speech from other external noise can be quite challenging. Here are some guidelines to follow when designing mobile devices that rely on speech output and input:

- Signal-to-noise ratio (S/N) is calculated by subtracting the noise decibels from the speech decibels.

- To successfully communicate voice messages in background noise, the speech level should exceed the noise level by at least 6 decibels (dB) (Bailey 1996).

- A user’s audio recall is enhanced when grammatical pauses are inserted in synthetic speech (Nooteboom 1983).

- Synthetic speech is less intelligible in the presence of background noise at a 10 dB S/N.

- When the noise level is 12 dB above the signal level, the consonants m, n, d, g, b, v, and z are confused with one another.

- When the noise level is 18 dB above the signal level, all consonants are confused with one another (Kryter 1972).
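
The arithmetic behind the first two guidelines can be sketched in a few lines of Python. This is only an illustration: the function names and the sample levels are assumptions made for the example, and only the 6 dB margin comes from the guideline above.

```python
# Minimum speech-over-noise margin from the guideline above (Bailey 1996).
MIN_SN_DB = 6.0


def signal_to_noise_db(speech_db: float, noise_db: float) -> float:
    """S/N in dB: the speech level minus the noise level."""
    return speech_db - noise_db


def min_speech_level_db(noise_db: float, margin_db: float = MIN_SN_DB) -> float:
    """Lowest speech level that still exceeds the noise by the margin."""
    return noise_db + margin_db


def meets_margin(speech_db: float, noise_db: float,
                 margin_db: float = MIN_SN_DB) -> bool:
    """True when the speech level exceeds the noise level by at least the margin."""
    return signal_to_noise_db(speech_db, noise_db) >= margin_db


# Hypothetical levels: a 65 dB environment and a 68 dB voice prompt.
print(signal_to_noise_db(68.0, 65.0))   # S/N of the prompt
print(min_speech_level_db(65.0))        # level the prompt would need
print(meets_margin(68.0, 65.0))         # whether the prompt is loud enough
```

In this hypothetical case the prompt falls short of the margin, so a design might raise the output level or fall back to another channel such as vibration.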

Speech Recognition Guidelines

In addition to the signal-to-noise guidelines in the preceding section, you must understand how users recognize speech. The following guidelines will assist you when designing Voice Notifications:

- Words are recognized more readily when they are used in a sentence than when they are isolated, especially in environments with background noise.

- Word recognition increases when the words are common and familiar to the user.

- Word recognition increases if the user is given prior knowledge of the sentence topic.
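
One way to apply these guidelines is to announce the topic first and then deliver the message as a short, complete sentence. The sketch below is a hypothetical helper, not part of any speech API; the function name, the word limit, and the sample text are all assumptions chosen for illustration.

```python
# Hypothetical cap that keeps spoken messages short and simple.
MAX_WORDS = 12


def compose_voice_notification(topic: str, sentence: str,
                               max_words: int = MAX_WORDS) -> str:
    """Build a spoken message that names the topic before the sentence."""
    topic = topic.strip().rstrip(".")
    sentence = sentence.strip()
    if len(sentence.split()) > max_words:
        raise ValueError("message is too long to be spoken clearly")
    # End with punctuation so the message reads as a complete sentence.
    if not sentence.endswith((".", "?", "!")):
        sentence += "."
    # The full stop after the topic is meant as a natural place to pause.
    return f"{topic}. {sentence}"


print(compose_voice_notification("Medication reminder",
                                 "Take your medication now"))
```

Stating the topic up front gives the listener prior knowledge of what the sentence is about, and keeping the words inside a sentence avoids presenting them in isolation.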

Audio Accessibility in the Mobile Space

When designing for mobile, as with any device, always consider your users, their needs, and their abilities. Many people who use mobile devices experience visual impairments. We need to create an enriching experience for them as well.

Recently, companies have begun addressing accessibility needs as standard functions in mobile devices. Before this, visually impaired users were forced to purchase supplemental screen reader software that worked on only a few compatible devices and browsers. This software is quite expensive, with prices starting at around $200 to $500.

Accessibility Resources

Here are useful resources on audio accessibility. They include information on the types of assistive technologies that companies are using in mobile devices today.

Apple has integrated VoiceOver, a screen access technology, into its iPhone, iPod, and iPad devices. For more information on Apple’s accessibility commitment, visit http://www.apple.com/accessibility.

Companies such as Code Factory have created Mobile Speak, a screen reader for multi-OS devices. See Code Factory’s site at http://www.codefactory.es/en.

For additional information on accessibility and technology assistance for the visually impaired, we recommend viewing the American Foundation for the Blind’s website, http://www.afb.org.

- We also recommend the following websites geared toward accessibility of mobile devices for all:

The Importance of Vibration

Depending on our users’ needs, their sensory limitations, and the environment in which the mobile device is used, vibration feedback can provide another powerful sensation to communicate meaning.

Since the largest organ in our body is our skin, which responds to pressure, we can sense vibrations anywhere on our body. Whether we are holding our device in our hands or carrying it in our pocket, we can feel the haptic output our devices produce.

When designing mobile devices that incorporate haptics, be familiar with the following information:

- Haptic sense can provide support when the visual and auditory channels are overloaded.

- The touch sense can respond to stimuli just as quickly as the auditory sense, and can respond even faster than the visual sense (Bailey 1996).

- In high-noise-level areas or where visual and auditory detection is limited, haptics can provide an advantage.

Common Haptic Outputs on Mobile Devices

Many mobile devices today use haptics to communicate a direct response to an action:

- A localized vibration on key entry or button push

- A ring tone set to vibrate

- A device vibration to indicate an in-application response, such as when playing an interactive game (e.g., the phone might vibrate when a fish is caught, or when the car you’re steering accidentally crashes)

Haptic Concerns

Using haptics appropriately is a great way to provide users with additional sensory feedback. However, you still need to be aware that:

- Haptics can drain the battery more quickly. Provide the option to turn haptic feedback on and off.

- Use haptics only when appropriate. Too much haptic feedback can desensitize users to the stimulus, causing them to ignore it.
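
Both concerns can be handled by a small gate that sits in front of whatever call actually drives the vibration motor. The following is a minimal sketch, not a platform API: the class name, the 0.5-second minimum gap, and the timestamps are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class HapticGate:
    """Decides whether a requested vibration should actually fire."""
    enabled: bool = True          # user-facing on/off setting
    min_interval_s: float = 0.5   # hypothetical minimum gap between pulses
    _last_pulse_s: float = field(default=float("-inf"), init=False, repr=False)

    def should_vibrate(self, now_s: float) -> bool:
        """Return True if a pulse is allowed at time now_s (in seconds)."""
        if not self.enabled:
            return False          # the user has turned haptics off
        if now_s - self._last_pulse_s < self.min_interval_s:
            return False          # too soon after the last pulse; skip it
        self._last_pulse_s = now_s
        return True


gate = HapticGate()
for t in (0.0, 0.2, 0.6):
    print(t, gate.should_vibrate(t))
```

Passing the time in as an argument keeps the sketch easy to test; a real application would more likely read a monotonic clock and expose the `enabled` flag in its settings screen.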

Patterns for Audio & Vibration

Using audio and vibration control appropriately provides users with methods to engage with the device other than relying on their visual sense. These controls can be very effective when users may be at a distance from their device, or are unable to directly look at the display but require alerts, feedback, or notifications. In other situations, a visually impaired user may require these controls because they provide accessibility. We will discuss the following patterns in this chapter:

- Nonverbal auditory tones must be used to provide feedback or alert users to conditions or events, but must not become confusing, lost in the background, or so frequent that critical alerts are disregarded. See Figure 12-1.

- A method must be provided to control some or all of the functions of the mobile device, or provide text input, without handling the device. See Figure 12-2.

- Mobile devices must be able to read text displayed on the screen, so it can be accessed and understood by users who cannot use or read the screen.

- Mobile devices must provide users with conditions, alarms, alerts, and other contextually relevant or time-bound content without reading the device screen.

- Vibrating alerts and tactile feedback should be provided to help ensure perception and emphasize the nature of UI mechanisms. See Figure 12-3.

Discuss & Add

Please do not change content above this line, as it's a perfect match with the printed book. Everything else you want to add goes down here.

Examples

If you want to add examples (and we occasionally do also) add them here.

Make a new section

Just like this. If, for example, you want to argue about the differences between, say, Tidwell's Vertical Stack, and our general concept of the List, then add a section to discuss. If we're successful, we'll get to make a new edition and will take all these discussions into account.